The Short Answer: No.

While Web3 promises to offer an unprecedented degree of independence to content-sharing platforms, the issue of on-chain moderation continues to loom large.

Preserving freedom of speech is essential; however, there are circumstances where the boundaries of civil discourse are overstepped, making these platforms less safe for all participants.

But where do we draw the line between content moderation and censorship?

What Does Web3 Represent?

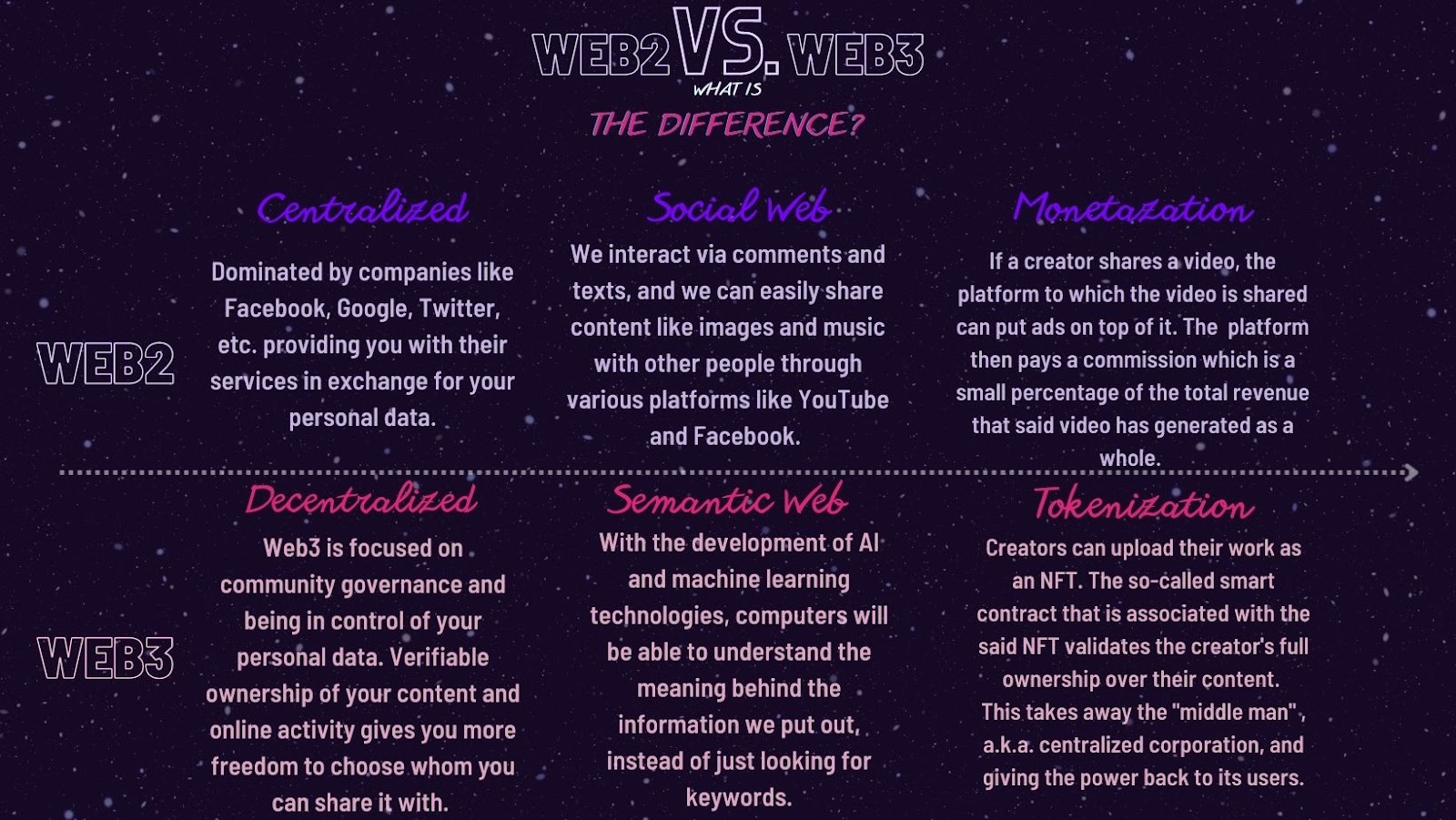

The next phase of the internet will allow people to interact with each other directly, without intermediaries. This means no more middlemen taking a cut of payment transactions or imposing unnecessary requirements on users who want to use their services.

Web3 is the natural expansion of the internet. What sets it apart from Web2 is that its applications are built on top of blockchain ecosystems instead of corporate-owned servers. Its future development and means of control rest firmly in the hands of its communities.

See also: What is Web3 And How it Affects Digital Marketing?

This is Both Exciting and Concerning

The Web3 world gives us more freedom of speech in every regard – whether we are using social media, building a website, giving online reviews, etc. However, this newly gained freedom opens new paths of expression, where malevolent content and hate speech can spread without any control or consequences.

Why is Content Moderation Important?

Content moderation is essential to the viability of online communities. Keeping inappropriate material off a platform keeps the community safe for everyone. For that reason, there will always be a need to regulate content posted online, even on Web3 platforms. The ability to publish anything on a Web3-based site sounds good in theory; in practice, intelligent moderation is required.

I’ve often had discussions with people asking this question: If a fundamental tenet of Web3 is the ability to freely share one’s thoughts, free from corporate rules and regulations, why is moderation necessary?

The question makes sense. But if we leave all control to good intentions and wishful thinking, social discourse will inevitably sink to the lowest common denominator.

Proper content moderation prevents social media platforms from becoming magnets for bad behavior, which can include:

- Hate speech

- Incitement to violence, both online and offline

- Spamming or bot-generated content

- Copyright infringement

- Phishing, scamming and spoofing

Decentralized Concerns Call for Decentralized Measures

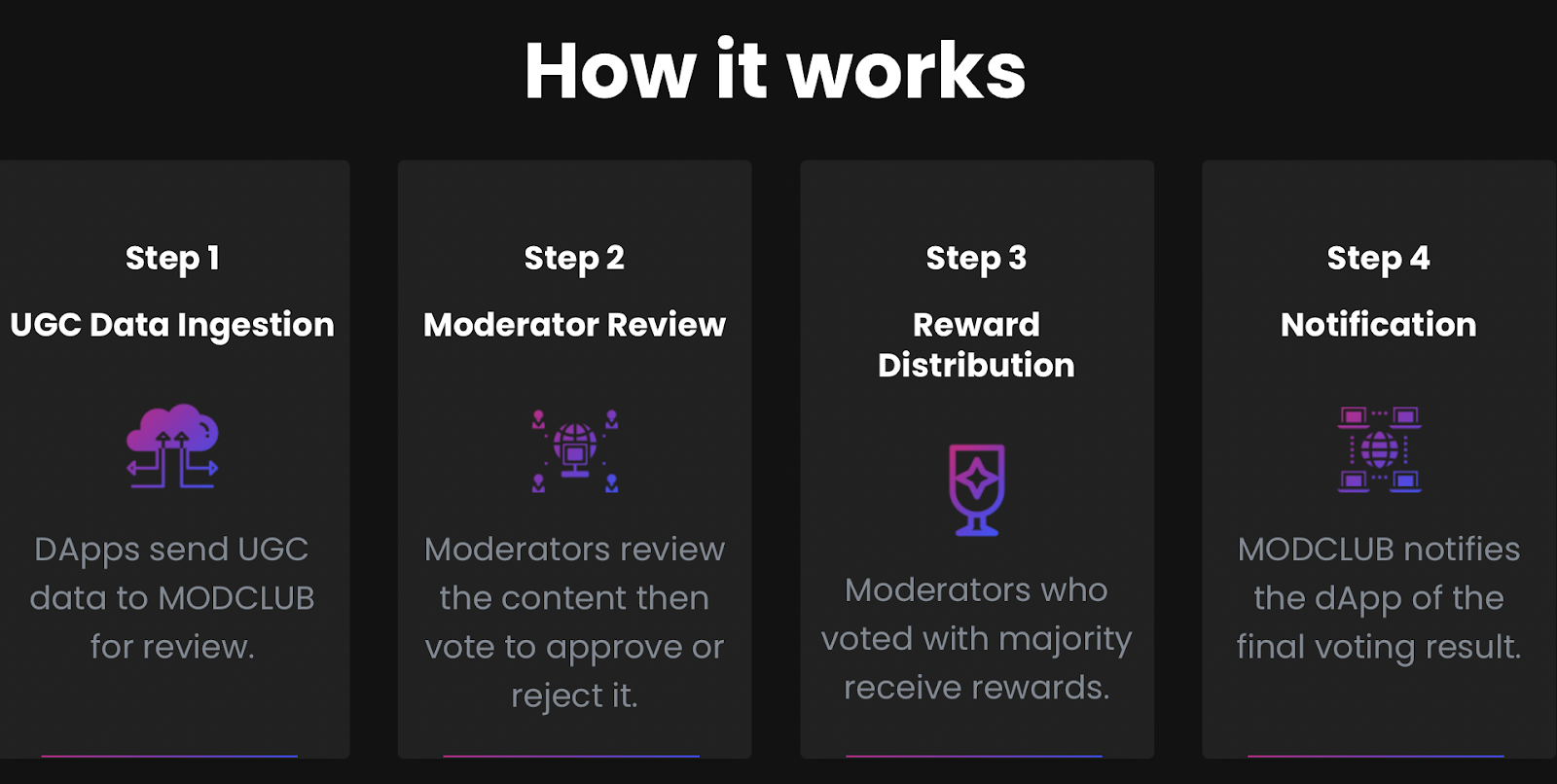

There is already a dApp with decentralized content moderation functionality and proof-of-humanity verification. It’s called MODCLUB, and it is fully operational on the Internet Computer blockchain. This dApp is based on the idea that Web3 content moderation should rely on community governance.

The concept is simple: a platform that needs moderation of user-generated content provides MODCLUB with the base rules and qualifications for all content it wishes to have evaluated. MODCLUB’s community of moderators then reviews each item and votes on whether or not it is appropriate for the platform.

Here is something to get excited about: anyone can become a content moderator by simply applying on the MODCLUB website, getting verified, and choosing what kind of posts and articles they would like to moderate. To discourage unfair and false voting, MODCLUB has introduced a reward system for all participants who vote “correctly” (i.e. with the majority) on the content presented to them.
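To make the voting idea concrete, here is a minimal sketch of majority voting with rewards for majority voters. It is not MODCLUB’s actual implementation or API: the names `Vote` and `tally`, the flat `reward_per_vote`, and the handling of ties are all assumptions made purely for illustration.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Vote:
    moderator: str  # hypothetical moderator ID
    approve: bool   # True = content is appropriate for the platform


def tally(votes: list, reward_per_vote: int = 1) -> tuple:
    """Return (decision, rewards).

    decision is True (approved), False (rejected), or None on a tie.
    rewards maps each majority voter to an assumed flat reward.
    """
    counts = Counter(v.approve for v in votes)
    approvals, rejections = counts[True], counts[False]
    if approvals == rejections:
        # Assumption: a tie yields no decision and no rewards.
        return None, {}
    decision: Optional[bool] = approvals > rejections
    # Only moderators who voted with the majority are rewarded.
    rewards = {v.moderator: reward_per_vote for v in votes if v.approve == decision}
    return decision, rewards


votes = [Vote("alice", True), Vote("bob", True), Vote("carol", False)]
decision, rewards = tally(votes)
print(decision, rewards)
```

In this example the content is approved two votes to one, so only the two approving moderators are rewarded. A real system would also need rules for quorum, ties, and reward size, none of which are described here.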

Here’s where you can find more information on MODCLUB:

*Originally published on fastblocks.com